Creating a Manager-Based Base Environment#

Environments bring together different aspects of the simulation such as

the scene, observations and actions spaces, reset events etc. to create a

coherent interface for various applications. In Isaac Lab, manager-based environments are

implemented as envs.ManagerBasedEnv and envs.ManagerBasedRLEnv classes.

The two classes are very similar, but envs.ManagerBasedRLEnv is useful for

reinforcement learning tasks and contains rewards, terminations, curriculum

and command generation. The envs.ManagerBasedEnv class is useful for

traditional robot control and doesn’t contain rewards and terminations.

In this tutorial, we will look at the base class envs.ManagerBasedEnv and its

corresponding configuration class envs.ManagerBasedEnvCfg for the manager-based workflow.

We will use the

cartpole environment from earlier to illustrate the different components

in creating a new envs.ManagerBasedEnv environment.

The Code#

The tutorial corresponds to the create_cartpole_base_env script in the scripts/tutorials/03_envs

directory.

Code for create_cartpole_base_env.py

1# Copyright (c) 2022-2026, The Isaac Lab Project Developers (https://github.com/isaac-sim/IsaacLab/blob/main/CONTRIBUTORS.md).

2# All rights reserved.

3#

4# SPDX-License-Identifier: BSD-3-Clause

5

6"""

7This script demonstrates how to create a simple environment with a cartpole. It combines the concepts of

8scene, action, observation and event managers to create an environment.

9

10.. code-block:: bash

11

12 ./isaaclab.sh -p scripts/tutorials/03_envs/create_cartpole_base_env.py --num_envs 32

13

14"""

15

16"""Launch Isaac Sim Simulator first."""

17

18

19import argparse

20

21from isaaclab.app import AppLauncher

22

23# add argparse arguments

24parser = argparse.ArgumentParser(description="Tutorial on creating a cartpole base environment.")

25parser.add_argument("--num_envs", type=int, default=16, help="Number of environments to spawn.")

26

27# append AppLauncher cli args

28AppLauncher.add_app_launcher_args(parser)

29# parse the arguments

30args_cli = parser.parse_args()

31

32# launch omniverse app

33app_launcher = AppLauncher(args_cli)

34simulation_app = app_launcher.app

35

36"""Rest everything follows."""

37

38import math

39

40import torch

41

42import isaaclab.envs.mdp as mdp

43from isaaclab.envs import ManagerBasedEnv, ManagerBasedEnvCfg

44from isaaclab.managers import EventTermCfg as EventTerm

45from isaaclab.managers import ObservationGroupCfg as ObsGroup

46from isaaclab.managers import ObservationTermCfg as ObsTerm

47from isaaclab.managers import SceneEntityCfg

48from isaaclab.utils import configclass

49

50from isaaclab_tasks.manager_based.classic.cartpole.cartpole_env_cfg import CartpoleSceneCfg

51

52

53@configclass

54class ActionsCfg:

55 """Action specifications for the environment."""

56

57 joint_efforts = mdp.JointEffortActionCfg(asset_name="robot", joint_names=["slider_to_cart"], scale=5.0)

58

59

60@configclass

61class ObservationsCfg:

62 """Observation specifications for the environment."""

63

64 @configclass

65 class PolicyCfg(ObsGroup):

66 """Observations for policy group."""

67

68 # observation terms (order preserved)

69 joint_pos_rel = ObsTerm(func=mdp.joint_pos_rel)

70 joint_vel_rel = ObsTerm(func=mdp.joint_vel_rel)

71

72 def __post_init__(self) -> None:

73 self.enable_corruption = False

74 self.concatenate_terms = True

75

76 # observation groups

77 policy: PolicyCfg = PolicyCfg()

78

79

80@configclass

81class EventCfg:

82 """Configuration for events."""

83

84 # on startup

85 add_pole_mass = EventTerm(

86 func=mdp.randomize_rigid_body_mass,

87 mode="startup",

88 params={

89 "asset_cfg": SceneEntityCfg("robot", body_names=["pole"]),

90 "mass_distribution_params": (0.1, 0.5),

91 "operation": "add",

92 },

93 )

94

95 # on reset

96 reset_cart_position = EventTerm(

97 func=mdp.reset_joints_by_offset,

98 mode="reset",

99 params={

100 "asset_cfg": SceneEntityCfg("robot", joint_names=["slider_to_cart"]),

101 "position_range": (-1.0, 1.0),

102 "velocity_range": (-0.1, 0.1),

103 },

104 )

105

106 reset_pole_position = EventTerm(

107 func=mdp.reset_joints_by_offset,

108 mode="reset",

109 params={

110 "asset_cfg": SceneEntityCfg("robot", joint_names=["cart_to_pole"]),

111 "position_range": (-0.125 * math.pi, 0.125 * math.pi),

112 "velocity_range": (-0.01 * math.pi, 0.01 * math.pi),

113 },

114 )

115

116

117@configclass

118class CartpoleEnvCfg(ManagerBasedEnvCfg):

119 """Configuration for the cartpole environment."""

120

121 # Scene settings

122 scene = CartpoleSceneCfg(num_envs=1024, env_spacing=2.5)

123 # Basic settings

124 observations = ObservationsCfg()

125 actions = ActionsCfg()

126 events = EventCfg()

127

128 def __post_init__(self):

129 """Post initialization."""

130 # viewer settings

131 self.viewer.eye = [4.5, 0.0, 6.0]

132 self.viewer.lookat = [0.0, 0.0, 2.0]

133 # step settings

134 self.decimation = 4 # env step every 4 sim steps: 200Hz / 4 = 50Hz

135 # simulation settings

136 self.sim.dt = 0.005 # sim step every 5ms: 200Hz

137

138

139def main():

140 """Main function."""

141 # parse the arguments

142 env_cfg = CartpoleEnvCfg()

143 env_cfg.scene.num_envs = args_cli.num_envs

144 env_cfg.sim.device = args_cli.device

145 # setup base environment

146 env = ManagerBasedEnv(cfg=env_cfg)

147

148 # simulate physics

149 count = 0

150 while simulation_app.is_running():

151 with torch.inference_mode():

152 # reset

153 if count % 300 == 0:

154 count = 0

155 env.reset()

156 print("-" * 80)

157 print("[INFO]: Resetting environment...")

158 # sample random actions

159 joint_efforts = torch.randn_like(env.action_manager.action)

160 # step the environment

161 obs, _ = env.step(joint_efforts)

162 # print current orientation of pole

163 print("[Env 0]: Pole joint: ", obs["policy"][0][1].item())

164 # update counter

165 count += 1

166

167 # close the environment

168 env.close()

169

170

171if __name__ == "__main__":

172 # run the main function

173 main()

174 # close sim app

175 simulation_app.close()

The Code Explained#

The base class envs.ManagerBasedEnv wraps around many intricacies of the simulation interaction

and provides a simple interface for the user to run the simulation and interact with it. It

is composed of the following components:

scene.InteractiveScene- The scene that is used for the simulation.managers.ActionManager- The manager that handles actions.managers.ObservationManager- The manager that handles observations.managers.EventManager- The manager that schedules operations (such as domain randomization) at specified simulation events. For instance, at startup, on resets, or periodic intervals.

By configuring these components, the user can create different variations of the same environment

with minimal effort. In this tutorial, we will go through the different components of the

envs.ManagerBasedEnv class and how to configure them to create a new environment.

Designing the scene#

The first step in creating a new environment is to configure its scene. For the cartpole environment, we will be using the scene from the previous tutorial. Thus, we omit the scene configuration here. For more details on how to configure a scene, see Using the Interactive Scene.

Defining actions#

In the previous tutorial, we directly input the action to the cartpole using

the assets.Articulation.set_joint_effort_target() method. In this tutorial, we will

use the managers.ActionManager to handle the actions.

The action manager can comprise of multiple managers.ActionTerm. Each action term

is responsible for applying control over a specific aspect of the environment. For instance,

for robotic arm, we can have two action terms – one for controlling the joints of the arm,

and the other for controlling the gripper. This composition allows the user to define

different control schemes for different aspects of the environment.

In the cartpole environment, we want to control the force applied to the cart to balance the pole. Thus, we will create an action term that controls the force applied to the cart.

@configclass

class ActionsCfg:

"""Action specifications for the environment."""

joint_efforts = mdp.JointEffortActionCfg(asset_name="robot", joint_names=["slider_to_cart"], scale=5.0)

Defining observations#

While the scene defines the state of the environment, the observations define the states

that are observable by the agent. These observations are used by the agent to make decisions

on what actions to take. In Isaac Lab, the observations are computed by the

managers.ObservationManager class.

Similar to the action manager, the observation manager can comprise of multiple observation terms. These are further grouped into observation groups which are used to define different observation spaces for the environment. For instance, for hierarchical control, we may want to define two observation groups – one for the low level controller and the other for the high level controller. It is assumed that all the observation terms in a group have the same dimensions.

For this tutorial, we will only define one observation group named "policy". While not completely

prescriptive, this group is a necessary requirement for various wrappers in Isaac Lab.

We define a group by inheriting from the managers.ObservationGroupCfg class. This class

collects different observation terms and help define common properties for the group, such

as enabling noise corruption or concatenating the observations into a single tensor.

The individual terms are defined by inheriting from the managers.ObservationTermCfg class.

This class takes in the managers.ObservationTermCfg.func that specifies the function or

callable class that computes the observation for that term. It includes other parameters for

defining the noise model, clipping, scaling, etc. However, we leave these parameters to their

default values for this tutorial.

@configclass

class ObservationsCfg:

"""Observation specifications for the environment."""

@configclass

class PolicyCfg(ObsGroup):

"""Observations for policy group."""

# observation terms (order preserved)

joint_pos_rel = ObsTerm(func=mdp.joint_pos_rel)

joint_vel_rel = ObsTerm(func=mdp.joint_vel_rel)

def __post_init__(self) -> None:

self.enable_corruption = False

self.concatenate_terms = True

# observation groups

policy: PolicyCfg = PolicyCfg()

Defining events#

At this point, we have defined the scene, actions and observations for the cartpole environment. The general idea for all these components is to define the configuration classes and then pass them to the corresponding managers. The event manager is no different.

The managers.EventManager class is responsible for events corresponding to changes

in the simulation state. This includes resetting (or randomizing) the scene, randomizing physical

properties (such as mass, friction, etc.), and varying visual properties (such as colors, textures, etc.).

Each of these are specified through the managers.EventTermCfg class, which

takes in the managers.EventTermCfg.func that specifies the function or callable

class that performs the event.

Additionally, it expects the mode of the event. The mode specifies when the event term should be applied.

It is possible to specify your own mode. For this, you’ll need to adapt the ManagerBasedEnv class.

However, out of the box, Isaac Lab provides three commonly used modes:

"startup"- Event that takes place only once at environment startup."reset"- Event that occurs on environment termination and reset."interval"- Event that are executed at a given interval, i.e., periodically after a certain number of steps.

For this example, we define events that randomize the pole’s mass on startup. This is done only once since this operation is expensive and we don’t want to do it on every reset. We also create an event to randomize the initial joint state of the cartpole and the pole at every reset.

@configclass

class EventCfg:

"""Configuration for events."""

# on startup

add_pole_mass = EventTerm(

func=mdp.randomize_rigid_body_mass,

mode="startup",

params={

"asset_cfg": SceneEntityCfg("robot", body_names=["pole"]),

"mass_distribution_params": (0.1, 0.5),

"operation": "add",

},

)

# on reset

reset_cart_position = EventTerm(

func=mdp.reset_joints_by_offset,

mode="reset",

params={

"asset_cfg": SceneEntityCfg("robot", joint_names=["slider_to_cart"]),

"position_range": (-1.0, 1.0),

"velocity_range": (-0.1, 0.1),

},

)

reset_pole_position = EventTerm(

func=mdp.reset_joints_by_offset,

mode="reset",

params={

"asset_cfg": SceneEntityCfg("robot", joint_names=["cart_to_pole"]),

"position_range": (-0.125 * math.pi, 0.125 * math.pi),

"velocity_range": (-0.01 * math.pi, 0.01 * math.pi),

},

)

Tying it all together#

Having defined the scene and manager configurations, we can now define the environment configuration

through the envs.ManagerBasedEnvCfg class. This class takes in the scene, action, observation and

event configurations.

In addition to these, it also takes in the envs.ManagerBasedEnvCfg.sim which defines the simulation

parameters such as the timestep, gravity, etc. This is initialized to the default values, but can

be modified as needed. We recommend doing so by defining the __post_init__() method in the

envs.ManagerBasedEnvCfg class, which is called after the configuration is initialized.

@configclass

class CartpoleEnvCfg(ManagerBasedEnvCfg):

"""Configuration for the cartpole environment."""

# Scene settings

scene = CartpoleSceneCfg(num_envs=1024, env_spacing=2.5)

# Basic settings

observations = ObservationsCfg()

actions = ActionsCfg()

events = EventCfg()

def __post_init__(self):

"""Post initialization."""

# viewer settings

self.viewer.eye = [4.5, 0.0, 6.0]

self.viewer.lookat = [0.0, 0.0, 2.0]

# step settings

self.decimation = 4 # env step every 4 sim steps: 200Hz / 4 = 50Hz

# simulation settings

self.sim.dt = 0.005 # sim step every 5ms: 200Hz

Running the simulation#

Lastly, we revisit the simulation execution loop. This is now much simpler since we have

abstracted away most of the details into the environment configuration. We only need to

call the envs.ManagerBasedEnv.reset() method to reset the environment and envs.ManagerBasedEnv.step()

method to step the environment. Both these functions return the observation and an info dictionary

which may contain additional information provided by the environment. These can be used by an

agent for decision-making.

The envs.ManagerBasedEnv class does not have any notion of terminations since that concept is

specific for episodic tasks. Thus, the user is responsible for defining the termination condition

for the environment. In this tutorial, we reset the simulation at regular intervals.

def main():

"""Main function."""

# parse the arguments

env_cfg = CartpoleEnvCfg()

env_cfg.scene.num_envs = args_cli.num_envs

env_cfg.sim.device = args_cli.device

# setup base environment

env = ManagerBasedEnv(cfg=env_cfg)

# simulate physics

count = 0

while simulation_app.is_running():

with torch.inference_mode():

# reset

if count % 300 == 0:

count = 0

env.reset()

print("-" * 80)

print("[INFO]: Resetting environment...")

# sample random actions

joint_efforts = torch.randn_like(env.action_manager.action)

# step the environment

obs, _ = env.step(joint_efforts)

# print current orientation of pole

print("[Env 0]: Pole joint: ", obs["policy"][0][1].item())

# update counter

count += 1

# close the environment

env.close()

An important thing to note above is that the entire simulation loop is wrapped inside the

torch.inference_mode() context manager. This is because the environment uses PyTorch

operations under-the-hood and we want to ensure that the simulation is not slowed down by

the overhead of PyTorch’s autograd engine and gradients are not computed for the simulation

operations.

The Code Execution#

To run the base environment made in this tutorial, you can use the following command:

./isaaclab.sh -p scripts/tutorials/03_envs/create_cartpole_base_env.py --num_envs 32

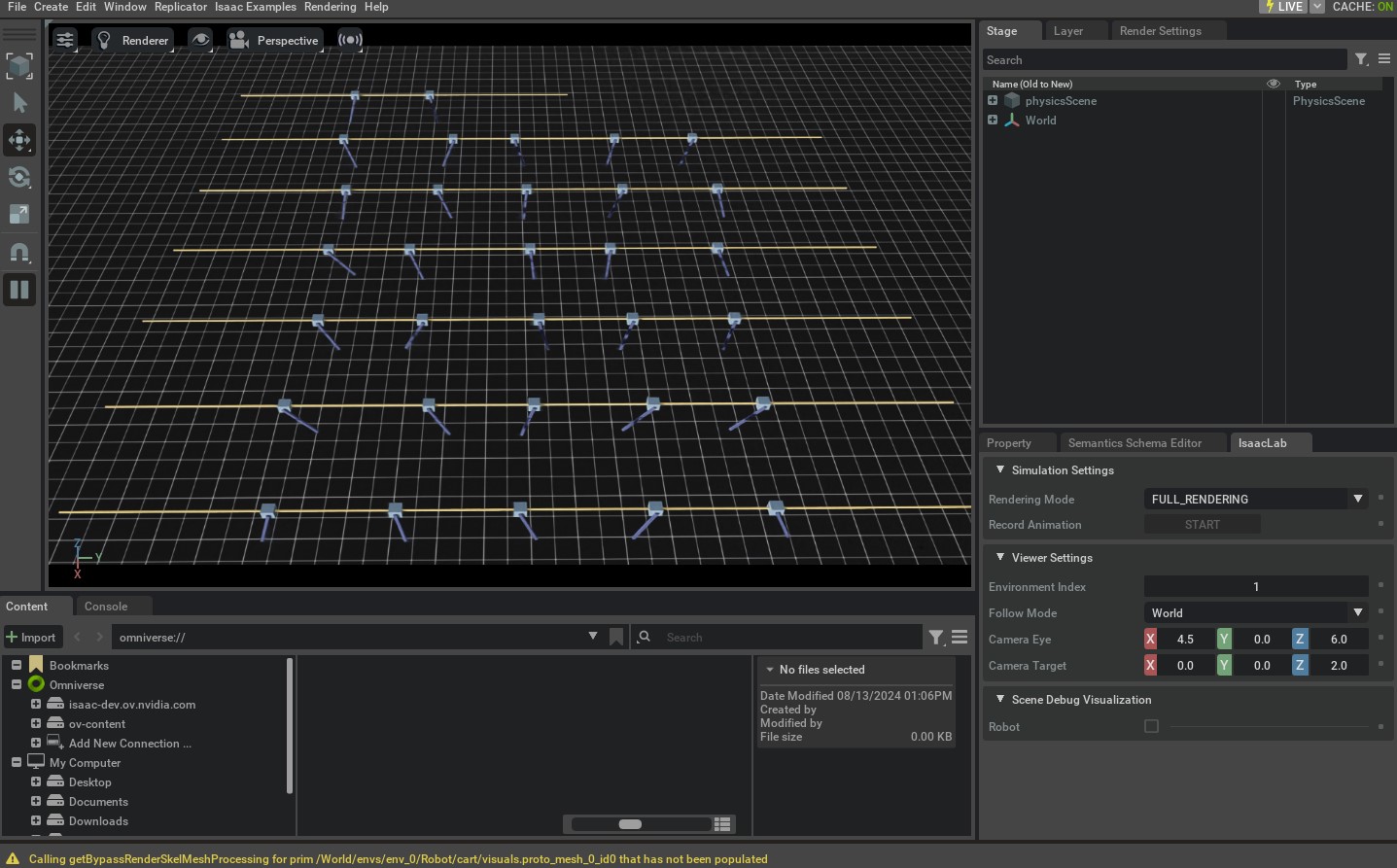

This should open a stage with a ground plane, light source, and cartpoles. The simulation should be

playing with random actions on the cartpole. Additionally, it opens a UI window on the bottom

right corner of the screen named "Isaac Lab". This window contains different UI elements that

can be used for debugging and visualization.

To stop the simulation, you can either close the window, or press Ctrl+C in the terminal where you

started the simulation.

In this tutorial, we learned about the different managers that help define a base environment. We

include more examples of defining the base environment in the scripts/tutorials/03_envs

directory. For completeness, they can be run using the following commands:

# Floating cube environment with custom action term for PD control

./isaaclab.sh -p scripts/tutorials/03_envs/create_cube_base_env.py --num_envs 32

# Quadrupedal locomotion environment with a policy that interacts with the environment

./isaaclab.sh -p scripts/tutorials/03_envs/create_quadruped_base_env.py --num_envs 32

In the following tutorial, we will look at the envs.ManagerBasedRLEnv class and how to use it

to create a Markovian Decision Process (MDP).