Using an operational space controller#

Sometimes, controlling the end-effector pose of the robot using a differential IK controller is not sufficient. For example, we might want to enforce a very specific pose tracking error dynamics in the task space, actuate the robot with joint effort/torque commands, or apply a contact force at a specific direction while controlling the motion of the other directions (e.g., washing the surface of the table with a cloth). In such tasks, we can use an operational space controller (OSC).

References for the operational space control:

O Khatib. A unified approach for motion and force control of robot manipulators: The operational space formulation. IEEE Journal of Robotics and Automation, 3(1):43–53, 1987. URL http://dx.doi.org/10.1109/JRA.1987.1087068.

Robot Dynamics Lecture Notes by Marco Hutter (ETH Zurich). URL https://ethz.ch/content/dam/ethz/special-interest/mavt/robotics-n-intelligent-systems/rsl-dam/documents/RobotDynamics2017/RD_HS2017script.pdf

In this tutorial, we will learn how to use an OSC to control the robot.

We will use the controllers.OperationalSpaceController class to apply a constant force perpendicular to a

tilted wall surface while tracking a desired end-effector pose in all the other directions.

The Code#

The tutorial corresponds to the run_osc.py script in the

scripts/tutorials/05_controllers directory.

Code for run_osc.py

1# Copyright (c) 2022-2026, The Isaac Lab Project Developers (https://github.com/isaac-sim/IsaacLab/blob/main/CONTRIBUTORS.md).

2# All rights reserved.

3#

4# SPDX-License-Identifier: BSD-3-Clause

5

6"""

7This script demonstrates how to use the operational space controller (OSC) with the simulator.

8

9The OSC controller can be configured in different modes. It uses the dynamical quantities such as Jacobians and

10mass matricescomputed by PhysX.

11

12.. code-block:: bash

13

14 # Usage

15 ./isaaclab.sh -p scripts/tutorials/05_controllers/run_osc.py

16

17"""

18

19"""Launch Isaac Sim Simulator first."""

20

21import argparse

22

23from isaaclab.app import AppLauncher

24

25# add argparse arguments

26parser = argparse.ArgumentParser(description="Tutorial on using the operational space controller.")

27parser.add_argument("--num_envs", type=int, default=128, help="Number of environments to spawn.")

28# append AppLauncher cli args

29AppLauncher.add_app_launcher_args(parser)

30# parse the arguments

31args_cli = parser.parse_args()

32

33# launch omniverse app

34app_launcher = AppLauncher(args_cli)

35simulation_app = app_launcher.app

36

37"""Rest everything follows."""

38

39import torch

40

41import isaaclab.sim as sim_utils

42from isaaclab.assets import Articulation, AssetBaseCfg

43from isaaclab.controllers import OperationalSpaceController, OperationalSpaceControllerCfg

44from isaaclab.markers import VisualizationMarkers

45from isaaclab.markers.config import FRAME_MARKER_CFG

46from isaaclab.scene import InteractiveScene, InteractiveSceneCfg

47from isaaclab.sensors import ContactSensorCfg

48from isaaclab.utils import configclass

49from isaaclab.utils.math import (

50 combine_frame_transforms,

51 matrix_from_quat,

52 quat_apply_inverse,

53 quat_inv,

54 subtract_frame_transforms,

55)

56

57##

58# Pre-defined configs

59##

60from isaaclab_assets import FRANKA_PANDA_HIGH_PD_CFG # isort:skip

61

62

63@configclass

64class SceneCfg(InteractiveSceneCfg):

65 """Configuration for a simple scene with a tilted wall."""

66

67 # ground plane

68 ground = AssetBaseCfg(

69 prim_path="/World/defaultGroundPlane",

70 spawn=sim_utils.GroundPlaneCfg(),

71 )

72

73 # lights

74 dome_light = AssetBaseCfg(

75 prim_path="/World/Light", spawn=sim_utils.DomeLightCfg(intensity=3000.0, color=(0.75, 0.75, 0.75))

76 )

77

78 # Tilted wall

79 tilted_wall = AssetBaseCfg(

80 prim_path="{ENV_REGEX_NS}/TiltedWall",

81 spawn=sim_utils.CuboidCfg(

82 size=(2.0, 1.5, 0.01),

83 collision_props=sim_utils.CollisionPropertiesCfg(),

84 visual_material=sim_utils.PreviewSurfaceCfg(diffuse_color=(1.0, 0.0, 0.0), opacity=0.1),

85 rigid_props=sim_utils.RigidBodyPropertiesCfg(kinematic_enabled=True),

86 activate_contact_sensors=True,

87 ),

88 init_state=AssetBaseCfg.InitialStateCfg(

89 pos=(0.6 + 0.085, 0.0, 0.3), rot=(0.9238795325, 0.0, -0.3826834324, 0.0)

90 ),

91 )

92

93 contact_forces = ContactSensorCfg(

94 prim_path="/World/envs/env_.*/TiltedWall",

95 update_period=0.0,

96 history_length=2,

97 debug_vis=False,

98 )

99

100 robot = FRANKA_PANDA_HIGH_PD_CFG.replace(prim_path="{ENV_REGEX_NS}/Robot")

101 robot.actuators["panda_shoulder"].stiffness = 0.0

102 robot.actuators["panda_shoulder"].damping = 0.0

103 robot.actuators["panda_forearm"].stiffness = 0.0

104 robot.actuators["panda_forearm"].damping = 0.0

105 robot.spawn.rigid_props.disable_gravity = True

106

107

108def run_simulator(sim: sim_utils.SimulationContext, scene: InteractiveScene):

109 """Runs the simulation loop.

110

111 Args:

112 sim: (SimulationContext) Simulation context.

113 scene: (InteractiveScene) Interactive scene.

114 """

115

116 # Extract scene entities for readability.

117 robot = scene["robot"]

118 contact_forces = scene["contact_forces"]

119

120 # Obtain indices for the end-effector and arm joints

121 ee_frame_name = "panda_leftfinger"

122 arm_joint_names = ["panda_joint.*"]

123 ee_frame_idx = robot.find_bodies(ee_frame_name)[0][0]

124 arm_joint_ids = robot.find_joints(arm_joint_names)[0]

125

126 # Create the OSC

127 osc_cfg = OperationalSpaceControllerCfg(

128 target_types=["pose_abs", "wrench_abs"],

129 impedance_mode="variable_kp",

130 inertial_dynamics_decoupling=True,

131 partial_inertial_dynamics_decoupling=False,

132 gravity_compensation=False,

133 motion_damping_ratio_task=1.0,

134 contact_wrench_stiffness_task=[0.0, 0.0, 0.1, 0.0, 0.0, 0.0],

135 motion_control_axes_task=[1, 1, 0, 1, 1, 1],

136 contact_wrench_control_axes_task=[0, 0, 1, 0, 0, 0],

137 nullspace_control="position",

138 )

139 osc = OperationalSpaceController(osc_cfg, num_envs=scene.num_envs, device=sim.device)

140

141 # Markers

142 frame_marker_cfg = FRAME_MARKER_CFG.copy()

143 frame_marker_cfg.markers["frame"].scale = (0.1, 0.1, 0.1)

144 ee_marker = VisualizationMarkers(frame_marker_cfg.replace(prim_path="/Visuals/ee_current"))

145 goal_marker = VisualizationMarkers(frame_marker_cfg.replace(prim_path="/Visuals/ee_goal"))

146

147 # Define targets for the arm

148 ee_goal_pose_set_tilted_b = torch.tensor(

149 [

150 [0.6, 0.15, 0.3, 0.0, 0.92387953, 0.0, 0.38268343],

151 [0.6, -0.3, 0.3, 0.0, 0.92387953, 0.0, 0.38268343],

152 [0.8, 0.0, 0.5, 0.0, 0.92387953, 0.0, 0.38268343],

153 ],

154 device=sim.device,

155 )

156 ee_goal_wrench_set_tilted_task = torch.tensor(

157 [

158 [0.0, 0.0, 10.0, 0.0, 0.0, 0.0],

159 [0.0, 0.0, 10.0, 0.0, 0.0, 0.0],

160 [0.0, 0.0, 10.0, 0.0, 0.0, 0.0],

161 ],

162 device=sim.device,

163 )

164 kp_set_task = torch.tensor(

165 [

166 [360.0, 360.0, 360.0, 360.0, 360.0, 360.0],

167 [420.0, 420.0, 420.0, 420.0, 420.0, 420.0],

168 [320.0, 320.0, 320.0, 320.0, 320.0, 320.0],

169 ],

170 device=sim.device,

171 )

172 ee_target_set = torch.cat([ee_goal_pose_set_tilted_b, ee_goal_wrench_set_tilted_task, kp_set_task], dim=-1)

173

174 # Define simulation stepping

175 sim_dt = sim.get_physics_dt()

176

177 # Update existing buffers

178 # Note: We need to update buffers before the first step for the controller.

179 robot.update(dt=sim_dt)

180

181 # Get the center of the robot soft joint limits

182 joint_centers = torch.mean(robot.data.soft_joint_pos_limits[:, arm_joint_ids, :], dim=-1)

183

184 # get the updated states

185 (

186 jacobian_b,

187 mass_matrix,

188 gravity,

189 ee_pose_b,

190 ee_vel_b,

191 root_pose_w,

192 ee_pose_w,

193 ee_force_b,

194 joint_pos,

195 joint_vel,

196 ) = update_states(sim, scene, robot, ee_frame_idx, arm_joint_ids, contact_forces)

197

198 # Track the given target command

199 current_goal_idx = 0 # Current goal index for the arm

200 command = torch.zeros(

201 scene.num_envs, osc.action_dim, device=sim.device

202 ) # Generic target command, which can be pose, position, force, etc.

203 ee_target_pose_b = torch.zeros(scene.num_envs, 7, device=sim.device) # Target pose in the body frame

204 ee_target_pose_w = torch.zeros(scene.num_envs, 7, device=sim.device) # Target pose in the world frame (for marker)

205

206 # Set joint efforts to zero

207 zero_joint_efforts = torch.zeros(scene.num_envs, robot.num_joints, device=sim.device)

208 joint_efforts = torch.zeros(scene.num_envs, len(arm_joint_ids), device=sim.device)

209

210 count = 0

211 # Simulation loop

212 while simulation_app.is_running():

213 # reset every 500 steps

214 if count % 500 == 0:

215 # reset joint state to default

216 default_joint_pos = robot.data.default_joint_pos.clone()

217 default_joint_vel = robot.data.default_joint_vel.clone()

218 robot.write_joint_state_to_sim(default_joint_pos, default_joint_vel)

219 robot.set_joint_effort_target(zero_joint_efforts) # Set zero torques in the initial step

220 robot.write_data_to_sim()

221 robot.reset()

222 # reset contact sensor

223 contact_forces.reset()

224 # reset target pose

225 robot.update(sim_dt)

226 _, _, _, ee_pose_b, _, _, _, _, _, _ = update_states(

227 sim, scene, robot, ee_frame_idx, arm_joint_ids, contact_forces

228 ) # at reset, the jacobians are not updated to the latest state

229 command, ee_target_pose_b, ee_target_pose_w, current_goal_idx = update_target(

230 sim, scene, osc, root_pose_w, ee_target_set, current_goal_idx

231 )

232 # set the osc command

233 osc.reset()

234 command, task_frame_pose_b = convert_to_task_frame(osc, command=command, ee_target_pose_b=ee_target_pose_b)

235 osc.set_command(command=command, current_ee_pose_b=ee_pose_b, current_task_frame_pose_b=task_frame_pose_b)

236 else:

237 # get the updated states

238 (

239 jacobian_b,

240 mass_matrix,

241 gravity,

242 ee_pose_b,

243 ee_vel_b,

244 root_pose_w,

245 ee_pose_w,

246 ee_force_b,

247 joint_pos,

248 joint_vel,

249 ) = update_states(sim, scene, robot, ee_frame_idx, arm_joint_ids, contact_forces)

250 # compute the joint commands

251 joint_efforts = osc.compute(

252 jacobian_b=jacobian_b,

253 current_ee_pose_b=ee_pose_b,

254 current_ee_vel_b=ee_vel_b,

255 current_ee_force_b=ee_force_b,

256 mass_matrix=mass_matrix,

257 gravity=gravity,

258 current_joint_pos=joint_pos,

259 current_joint_vel=joint_vel,

260 nullspace_joint_pos_target=joint_centers,

261 )

262 # apply actions

263 robot.set_joint_effort_target(joint_efforts, joint_ids=arm_joint_ids)

264 robot.write_data_to_sim()

265

266 # update marker positions

267 ee_marker.visualize(ee_pose_w[:, 0:3], ee_pose_w[:, 3:7])

268 goal_marker.visualize(ee_target_pose_w[:, 0:3], ee_target_pose_w[:, 3:7])

269

270 # perform step

271 sim.step(render=True)

272 # update robot buffers

273 robot.update(sim_dt)

274 # update buffers

275 scene.update(sim_dt)

276 # update sim-time

277 count += 1

278

279

280# Update robot states

281def update_states(

282 sim: sim_utils.SimulationContext,

283 scene: InteractiveScene,

284 robot: Articulation,

285 ee_frame_idx: int,

286 arm_joint_ids: list[int],

287 contact_forces,

288):

289 """Update the robot states.

290

291 Args:

292 sim: (SimulationContext) Simulation context.

293 scene: (InteractiveScene) Interactive scene.

294 robot: (Articulation) Robot articulation.

295 ee_frame_idx: (int) End-effector frame index.

296 arm_joint_ids: (list[int]) Arm joint indices.

297 contact_forces: (ContactSensor) Contact sensor.

298

299 Returns:

300 jacobian_b (torch.tensor): Jacobian in the body frame.

301 mass_matrix (torch.tensor): Mass matrix.

302 gravity (torch.tensor): Gravity vector.

303 ee_pose_b (torch.tensor): End-effector pose in the body frame.

304 ee_vel_b (torch.tensor): End-effector velocity in the body frame.

305 root_pose_w (torch.tensor): Root pose in the world frame.

306 ee_pose_w (torch.tensor): End-effector pose in the world frame.

307 ee_force_b (torch.tensor): End-effector force in the body frame.

308 joint_pos (torch.tensor): The joint positions.

309 joint_vel (torch.tensor): The joint velocities.

310

311 Raises:

312 ValueError: Undefined target_type.

313 """

314 # obtain dynamics related quantities from simulation

315 ee_jacobi_idx = ee_frame_idx - 1

316 jacobian_w = robot.root_physx_view.get_jacobians()[:, ee_jacobi_idx, :, arm_joint_ids]

317 mass_matrix = robot.root_physx_view.get_generalized_mass_matrices()[:, arm_joint_ids, :][:, :, arm_joint_ids]

318 gravity = robot.root_physx_view.get_gravity_compensation_forces()[:, arm_joint_ids]

319 # Convert the Jacobian from world to root frame

320 jacobian_b = jacobian_w.clone()

321 root_rot_matrix = matrix_from_quat(quat_inv(robot.data.root_quat_w))

322 jacobian_b[:, :3, :] = torch.bmm(root_rot_matrix, jacobian_b[:, :3, :])

323 jacobian_b[:, 3:, :] = torch.bmm(root_rot_matrix, jacobian_b[:, 3:, :])

324

325 # Compute current pose of the end-effector

326 root_pos_w = robot.data.root_pos_w

327 root_quat_w = robot.data.root_quat_w

328 ee_pos_w = robot.data.body_pos_w[:, ee_frame_idx]

329 ee_quat_w = robot.data.body_quat_w[:, ee_frame_idx]

330 ee_pos_b, ee_quat_b = subtract_frame_transforms(root_pos_w, root_quat_w, ee_pos_w, ee_quat_w)

331 root_pose_w = torch.cat([root_pos_w, root_quat_w], dim=-1)

332 ee_pose_w = torch.cat([ee_pos_w, ee_quat_w], dim=-1)

333 ee_pose_b = torch.cat([ee_pos_b, ee_quat_b], dim=-1)

334

335 # Compute the current velocity of the end-effector

336 ee_vel_w = robot.data.body_vel_w[:, ee_frame_idx, :] # Extract end-effector velocity in the world frame

337 root_vel_w = robot.data.root_vel_w # Extract root velocity in the world frame

338 relative_vel_w = ee_vel_w - root_vel_w # Compute the relative velocity in the world frame

339 ee_lin_vel_b = quat_apply_inverse(robot.data.root_quat_w, relative_vel_w[:, 0:3]) # From world to root frame

340 ee_ang_vel_b = quat_apply_inverse(robot.data.root_quat_w, relative_vel_w[:, 3:6])

341 ee_vel_b = torch.cat([ee_lin_vel_b, ee_ang_vel_b], dim=-1)

342

343 # Calculate the contact force

344 ee_force_w = torch.zeros(scene.num_envs, 3, device=sim.device)

345 sim_dt = sim.get_physics_dt()

346 contact_forces.update(sim_dt) # update contact sensor

347 # Calculate the contact force by averaging over last four time steps (i.e., to smoothen) and

348 # taking the max of three surfaces as only one should be the contact of interest

349 ee_force_w, _ = torch.max(torch.mean(contact_forces.data.net_forces_w_history, dim=1), dim=1)

350

351 # This is a simplification, only for the sake of testing.

352 ee_force_b = ee_force_w

353

354 # Get joint positions and velocities

355 joint_pos = robot.data.joint_pos[:, arm_joint_ids]

356 joint_vel = robot.data.joint_vel[:, arm_joint_ids]

357

358 return (

359 jacobian_b,

360 mass_matrix,

361 gravity,

362 ee_pose_b,

363 ee_vel_b,

364 root_pose_w,

365 ee_pose_w,

366 ee_force_b,

367 joint_pos,

368 joint_vel,

369 )

370

371

372# Update the target commands

373def update_target(

374 sim: sim_utils.SimulationContext,

375 scene: InteractiveScene,

376 osc: OperationalSpaceController,

377 root_pose_w: torch.tensor,

378 ee_target_set: torch.tensor,

379 current_goal_idx: int,

380):

381 """Update the targets for the operational space controller.

382

383 Args:

384 sim: (SimulationContext) Simulation context.

385 scene: (InteractiveScene) Interactive scene.

386 osc: (OperationalSpaceController) Operational space controller.

387 root_pose_w: (torch.tensor) Root pose in the world frame.

388 ee_target_set: (torch.tensor) End-effector target set.

389 current_goal_idx: (int) Current goal index.

390

391 Returns:

392 command (torch.tensor): Updated target command.

393 ee_target_pose_b (torch.tensor): Updated target pose in the body frame.

394 ee_target_pose_w (torch.tensor): Updated target pose in the world frame.

395 next_goal_idx (int): Next goal index.

396

397 Raises:

398 ValueError: Undefined target_type.

399 """

400

401 # update the ee desired command

402 command = torch.zeros(scene.num_envs, osc.action_dim, device=sim.device)

403 command[:] = ee_target_set[current_goal_idx]

404

405 # update the ee desired pose

406 ee_target_pose_b = torch.zeros(scene.num_envs, 7, device=sim.device)

407 for target_type in osc.cfg.target_types:

408 if target_type == "pose_abs":

409 ee_target_pose_b[:] = command[:, :7]

410 elif target_type == "wrench_abs":

411 pass # ee_target_pose_b could stay at the root frame for force control, what matters is ee_target_b

412 else:

413 raise ValueError("Undefined target_type within update_target().")

414

415 # update the target desired pose in world frame (for marker)

416 ee_target_pos_w, ee_target_quat_w = combine_frame_transforms(

417 root_pose_w[:, 0:3], root_pose_w[:, 3:7], ee_target_pose_b[:, 0:3], ee_target_pose_b[:, 3:7]

418 )

419 ee_target_pose_w = torch.cat([ee_target_pos_w, ee_target_quat_w], dim=-1)

420

421 next_goal_idx = (current_goal_idx + 1) % len(ee_target_set)

422

423 return command, ee_target_pose_b, ee_target_pose_w, next_goal_idx

424

425

426# Convert the target commands to the task frame

427def convert_to_task_frame(osc: OperationalSpaceController, command: torch.tensor, ee_target_pose_b: torch.tensor):

428 """Converts the target commands to the task frame.

429

430 Args:

431 osc: OperationalSpaceController object.

432 command: Command to be converted.

433 ee_target_pose_b: Target pose in the body frame.

434

435 Returns:

436 command (torch.tensor): Target command in the task frame.

437 task_frame_pose_b (torch.tensor): Target pose in the task frame.

438

439 Raises:

440 ValueError: Undefined target_type.

441 """

442 command = command.clone()

443 task_frame_pose_b = ee_target_pose_b.clone()

444

445 cmd_idx = 0

446 for target_type in osc.cfg.target_types:

447 if target_type == "pose_abs":

448 command[:, :3], command[:, 3:7] = subtract_frame_transforms(

449 task_frame_pose_b[:, :3], task_frame_pose_b[:, 3:], command[:, :3], command[:, 3:7]

450 )

451 cmd_idx += 7

452 elif target_type == "wrench_abs":

453 # These are already defined in target frame for ee_goal_wrench_set_tilted_task (since it is

454 # easier), so not transforming

455 cmd_idx += 6

456 else:

457 raise ValueError("Undefined target_type within _convert_to_task_frame().")

458

459 return command, task_frame_pose_b

460

461

462def main():

463 """Main function."""

464 # Load kit helper

465 sim_cfg = sim_utils.SimulationCfg(dt=0.01, device=args_cli.device)

466 sim = sim_utils.SimulationContext(sim_cfg)

467 # Set main camera

468 sim.set_camera_view([2.5, 2.5, 2.5], [0.0, 0.0, 0.0])

469 # Design scene

470 scene_cfg = SceneCfg(num_envs=args_cli.num_envs, env_spacing=2.0)

471 scene = InteractiveScene(scene_cfg)

472 # Play the simulator

473 sim.reset()

474 # Now we are ready!

475 print("[INFO]: Setup complete...")

476 # Run the simulator

477 run_simulator(sim, scene)

478

479

480if __name__ == "__main__":

481 # run the main function

482 main()

483 # close sim app

484 simulation_app.close()

Creating an Operational Space Controller#

The OperationalSpaceController class computes the joint

efforts/torques for a robot to do simultaneous motion and force control in task space.

The reference frame of this task space could be an arbitrary coordinate frame in Euclidean space. By default,

it is the robot’s base frame. However, in certain cases, it could be easier to define target coordinates w.r.t. a

different frame. In such cases, the pose of this task reference frame, w.r.t. to the robot’s base frame, should be

provided in the set_command method’s current_task_frame_pose_b argument. For example, in this tutorial, it

makes sense to define the target commands w.r.t. a frame that is parallel to the wall surface, as the force control

direction would be then only nonzero in the z-axis of this frame. The target pose, which is set to have the same

orientation as the wall surface, is such a candidate and is used as the task frame in this tutorial. Therefore, all

the arguments to the OperationalSpaceControllerCfg should be set with this task reference frame

in mind.

For the motion control, the task space targets could be given as absolute (i.e., defined w.r.t. the robot base,

target_types: "pose_abs") or relative the the end-effector’s current pose (i.e., target_types: "pose_rel").

For the force control, the task space targets could be given as absolute (i.e., defined w.r.t. the robot base,

target_types: "force_abs"). If it is desired to apply pose and force control simultaneously, the target_types

should be a list such as ["pose_abs", "wrench_abs"] or ["pose_rel", "wrench_abs"].

The axes that the motion and force control will be applied can be specified using the motion_control_axes_task and

force_control_axes_task arguments, respectively. These lists should consist of 0/1 for all six axes (position and

rotation) and be complementary to each other (e.g., for the x-axis, if the motion_control_axes_task is 0, the

force_control_axes_task should be 1).

For the motion control axes, desired stiffness, and damping ratio values can be specified using the

motion_control_stiffness and motion_damping_ratio_task arguments, which can be a scalar (same value for all

axes) or a list of six scalars, one value corresponding to each axis. If desired, the stiffness and damping ratio

values could be a command parameter (e.g., to learn the values using RL or change them on the go). For this,

impedance_mode should be either "variable_kp" to include the stiffness values within the command or

"variable" to include both the stiffness and damping ratio values. In these cases, motion_stiffness_limits_task

and motion_damping_limits_task should be set as well, which puts bounds on the stiffness and damping ratio values.

For contact force control, it is possible to apply an open-loop force control by not setting the

contact_wrench_stiffness_task, or apply a closed-loop force control (with the feed-forward term) by setting

the desired stiffness values using the contact_wrench_stiffness_task argument, which can be a scalar or a list

of six scalars. Please note that, currently, only the linear part of the contact wrench (hence the first three

elements of the contact_wrench_stiffness_task) is considered in the closed-loop control, as the rotational part

cannot be measured with the contact sensors.

For the motion control, inertial_dynamics_decoupling should be set to True to use the robot’s inertia matrix

to decouple the desired accelerations in the task space. This is important for the motion control to be accurate,

especially for rapid movements. This inertial decoupling accounts for the coupling between all the six motion axes.

If desired, the inertial coupling between the translational and rotational axes could be ignored by setting the

partial_inertial_dynamics_decoupling to True.

If it is desired to include the gravity compensation in the operational space command, the gravity_compensation

should be set to True.

A final consideration regarding the operational space control is what to do with the null-space of redundant robots.

The null-space is the subspace of the joint space that does not affect the task space coordinates. If nothing is done

to control the null-space, the robot joints will float without moving the end-effector. This might be undesired (e.g.,

the robot joints might get close to their limits), and one might want to control the robot behaviour within its

null-space. One way to do is to set nullspace_control to "position" (by default it is "none") which

integrates a null-space PD controller to attract the robot joints to desired targets without affecting the task

space. The behaviour of this null-space controller can be defined using the nullspace_stiffness and

nullspace_damping_ratio arguments. Please note that theoretical decoupling of the null-space and task space

accelerations is only possible when inertial_dynamics_decoupling is set to True and

partial_inertial_dynamics_decoupling is set to False.

The included OSC implementation performs the computation in a batched format and uses PyTorch operations.

In this tutorial, we will use "pose_abs" for controlling the motion in all axes except the z-axis and

"wrench_abs" for controlling the force in the z-axis. Moreover, we will include the full inertia decoupling in

the motion control and not include the gravity compensation, as the gravity is disabled from the robot configuration.

We set the impedance mode to "variable_kp" to dynamically change the stiffness values

(motion_damping_ratio_task is set to 1: the kd values adapt according to kp values to maintain a critically

damped response). Finally, nullspace_control is set to use "position" where the joint set points are provided

to be the center of the joint position limits.

# Create the OSC

osc_cfg = OperationalSpaceControllerCfg(

target_types=["pose_abs", "wrench_abs"],

impedance_mode="variable_kp",

inertial_dynamics_decoupling=True,

partial_inertial_dynamics_decoupling=False,

gravity_compensation=False,

motion_damping_ratio_task=1.0,

contact_wrench_stiffness_task=[0.0, 0.0, 0.1, 0.0, 0.0, 0.0],

motion_control_axes_task=[1, 1, 0, 1, 1, 1],

contact_wrench_control_axes_task=[0, 0, 1, 0, 0, 0],

nullspace_control="position",

)

osc = OperationalSpaceController(osc_cfg, num_envs=scene.num_envs, device=sim.device)

Updating the states of the robot#

The OSC implementation is a computation-only class. Thus, it expects the user to provide the necessary information about the robot. This includes the robot’s Jacobian matrix, mass/inertia matrix, end-effector pose, velocity, contact force (all in the root frame), and finally, the joint positions and velocities. Moreover, the user should provide gravity compensation vector and null-space joint position targets if required.

# Update robot states

def update_states(

sim: sim_utils.SimulationContext,

scene: InteractiveScene,

robot: Articulation,

ee_frame_idx: int,

arm_joint_ids: list[int],

contact_forces,

):

"""Update the robot states.

Args:

sim: (SimulationContext) Simulation context.

scene: (InteractiveScene) Interactive scene.

robot: (Articulation) Robot articulation.

ee_frame_idx: (int) End-effector frame index.

arm_joint_ids: (list[int]) Arm joint indices.

contact_forces: (ContactSensor) Contact sensor.

Returns:

jacobian_b (torch.tensor): Jacobian in the body frame.

mass_matrix (torch.tensor): Mass matrix.

gravity (torch.tensor): Gravity vector.

ee_pose_b (torch.tensor): End-effector pose in the body frame.

ee_vel_b (torch.tensor): End-effector velocity in the body frame.

root_pose_w (torch.tensor): Root pose in the world frame.

ee_pose_w (torch.tensor): End-effector pose in the world frame.

ee_force_b (torch.tensor): End-effector force in the body frame.

joint_pos (torch.tensor): The joint positions.

joint_vel (torch.tensor): The joint velocities.

Raises:

ValueError: Undefined target_type.

"""

# obtain dynamics related quantities from simulation

ee_jacobi_idx = ee_frame_idx - 1

jacobian_w = robot.root_physx_view.get_jacobians()[:, ee_jacobi_idx, :, arm_joint_ids]

mass_matrix = robot.root_physx_view.get_generalized_mass_matrices()[:, arm_joint_ids, :][:, :, arm_joint_ids]

gravity = robot.root_physx_view.get_gravity_compensation_forces()[:, arm_joint_ids]

# Convert the Jacobian from world to root frame

jacobian_b = jacobian_w.clone()

root_rot_matrix = matrix_from_quat(quat_inv(robot.data.root_quat_w))

jacobian_b[:, :3, :] = torch.bmm(root_rot_matrix, jacobian_b[:, :3, :])

jacobian_b[:, 3:, :] = torch.bmm(root_rot_matrix, jacobian_b[:, 3:, :])

# Compute current pose of the end-effector

root_pos_w = robot.data.root_pos_w

root_quat_w = robot.data.root_quat_w

ee_pos_w = robot.data.body_pos_w[:, ee_frame_idx]

ee_quat_w = robot.data.body_quat_w[:, ee_frame_idx]

ee_pos_b, ee_quat_b = subtract_frame_transforms(root_pos_w, root_quat_w, ee_pos_w, ee_quat_w)

root_pose_w = torch.cat([root_pos_w, root_quat_w], dim=-1)

ee_pose_w = torch.cat([ee_pos_w, ee_quat_w], dim=-1)

ee_pose_b = torch.cat([ee_pos_b, ee_quat_b], dim=-1)

# Compute the current velocity of the end-effector

ee_vel_w = robot.data.body_vel_w[:, ee_frame_idx, :] # Extract end-effector velocity in the world frame

root_vel_w = robot.data.root_vel_w # Extract root velocity in the world frame

relative_vel_w = ee_vel_w - root_vel_w # Compute the relative velocity in the world frame

ee_lin_vel_b = quat_apply_inverse(robot.data.root_quat_w, relative_vel_w[:, 0:3]) # From world to root frame

ee_ang_vel_b = quat_apply_inverse(robot.data.root_quat_w, relative_vel_w[:, 3:6])

ee_vel_b = torch.cat([ee_lin_vel_b, ee_ang_vel_b], dim=-1)

# Calculate the contact force

ee_force_w = torch.zeros(scene.num_envs, 3, device=sim.device)

sim_dt = sim.get_physics_dt()

contact_forces.update(sim_dt) # update contact sensor

# Calculate the contact force by averaging over last four time steps (i.e., to smoothen) and

# taking the max of three surfaces as only one should be the contact of interest

ee_force_w, _ = torch.max(torch.mean(contact_forces.data.net_forces_w_history, dim=1), dim=1)

# This is a simplification, only for the sake of testing.

ee_force_b = ee_force_w

# Get joint positions and velocities

joint_pos = robot.data.joint_pos[:, arm_joint_ids]

joint_vel = robot.data.joint_vel[:, arm_joint_ids]

return (

jacobian_b,

mass_matrix,

gravity,

ee_pose_b,

ee_vel_b,

root_pose_w,

ee_pose_w,

ee_force_b,

joint_pos,

joint_vel,

)

Computing robot command#

The OSC separates the operation of setting the desired command and computing the desired joint positions.

To set the desired command, the user should provide command vector, which includes the target commands

(i.e., in the order they appear in the target_types argument of the OSC configuration),

and the desired stiffness and damping ratio values if the impedance_mode is set to "variable_kp" or "variable".

They should be all in the same coordinate frame as the task frame (e.g., indicated with _task subscript) and

concatanated together.

In this tutorial, the desired wrench is already defined w.r.t. the task frame, and the desired pose is transformed to the task frame as the following:

# Convert the target commands to the task frame

def convert_to_task_frame(osc: OperationalSpaceController, command: torch.tensor, ee_target_pose_b: torch.tensor):

"""Converts the target commands to the task frame.

Args:

osc: OperationalSpaceController object.

command: Command to be converted.

ee_target_pose_b: Target pose in the body frame.

Returns:

command (torch.tensor): Target command in the task frame.

task_frame_pose_b (torch.tensor): Target pose in the task frame.

Raises:

ValueError: Undefined target_type.

"""

command = command.clone()

task_frame_pose_b = ee_target_pose_b.clone()

cmd_idx = 0

for target_type in osc.cfg.target_types:

if target_type == "pose_abs":

command[:, :3], command[:, 3:7] = subtract_frame_transforms(

task_frame_pose_b[:, :3], task_frame_pose_b[:, 3:], command[:, :3], command[:, 3:7]

)

cmd_idx += 7

elif target_type == "wrench_abs":

# These are already defined in target frame for ee_goal_wrench_set_tilted_task (since it is

# easier), so not transforming

cmd_idx += 6

else:

raise ValueError("Undefined target_type within _convert_to_task_frame().")

return command, task_frame_pose_b

The OSC command is set with the command vector in the task frame, the end-effector pose in the base frame, and the task (reference) frame pose in the base frame as the following. This information is needed, as the internal computations are done in the base frame.

# set the osc command

osc.reset()

command, task_frame_pose_b = convert_to_task_frame(osc, command=command, ee_target_pose_b=ee_target_pose_b)

osc.set_command(command=command, current_ee_pose_b=ee_pose_b, current_task_frame_pose_b=task_frame_pose_b)

The joint effort/torque values are computed using the provided robot states and the desired command as the following:

# compute the joint commands

joint_efforts = osc.compute(

jacobian_b=jacobian_b,

current_ee_pose_b=ee_pose_b,

current_ee_vel_b=ee_vel_b,

current_ee_force_b=ee_force_b,

mass_matrix=mass_matrix,

gravity=gravity,

current_joint_pos=joint_pos,

current_joint_vel=joint_vel,

nullspace_joint_pos_target=joint_centers,

)

The computed joint effort/torque targets can then be applied on the robot.

# apply actions

robot.set_joint_effort_target(joint_efforts, joint_ids=arm_joint_ids)

robot.write_data_to_sim()

The Code Execution#

You can now run the script and see the result:

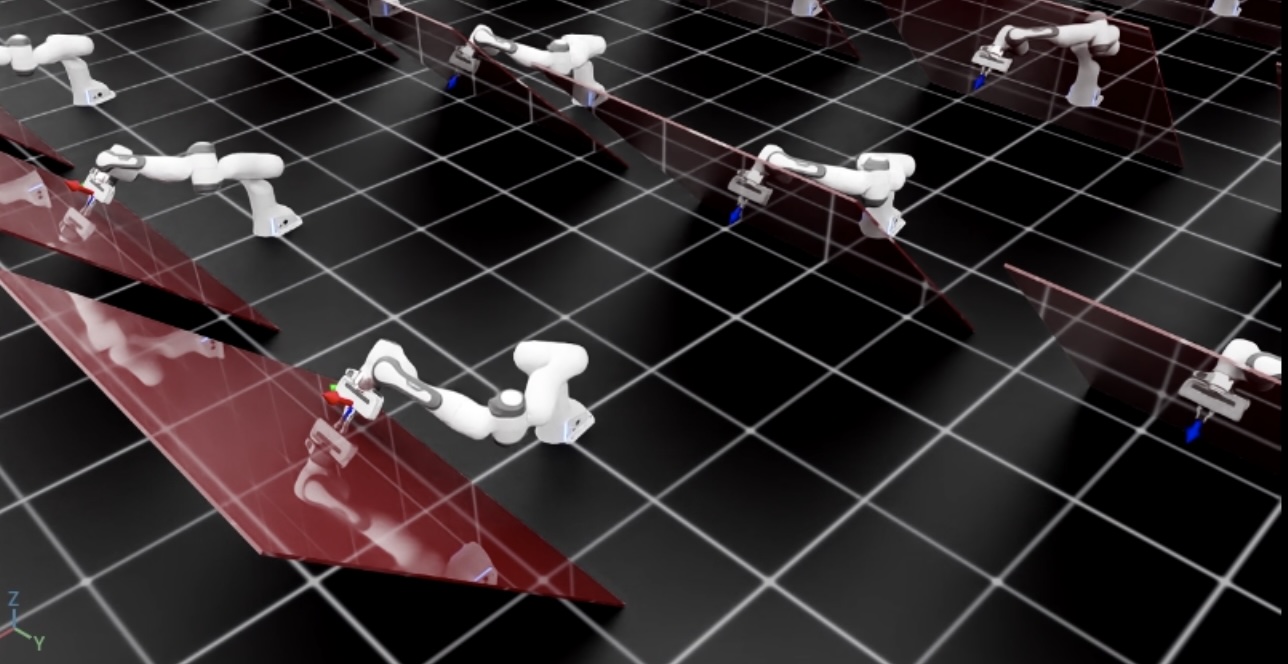

./isaaclab.sh -p scripts/tutorials/05_controllers/run_osc.py --num_envs 128

The script will start a simulation with 128 robots. The robots will be controlled using the OSC. The current and desired end-effector poses should be displayed using frame markers in addition to the red tilted wall. You should see that the robot reaches the desired pose while applying a constant force perpendicular to the wall surface.

To stop the simulation, you can either close the window or press Ctrl+C in the terminal.