Ray Caster

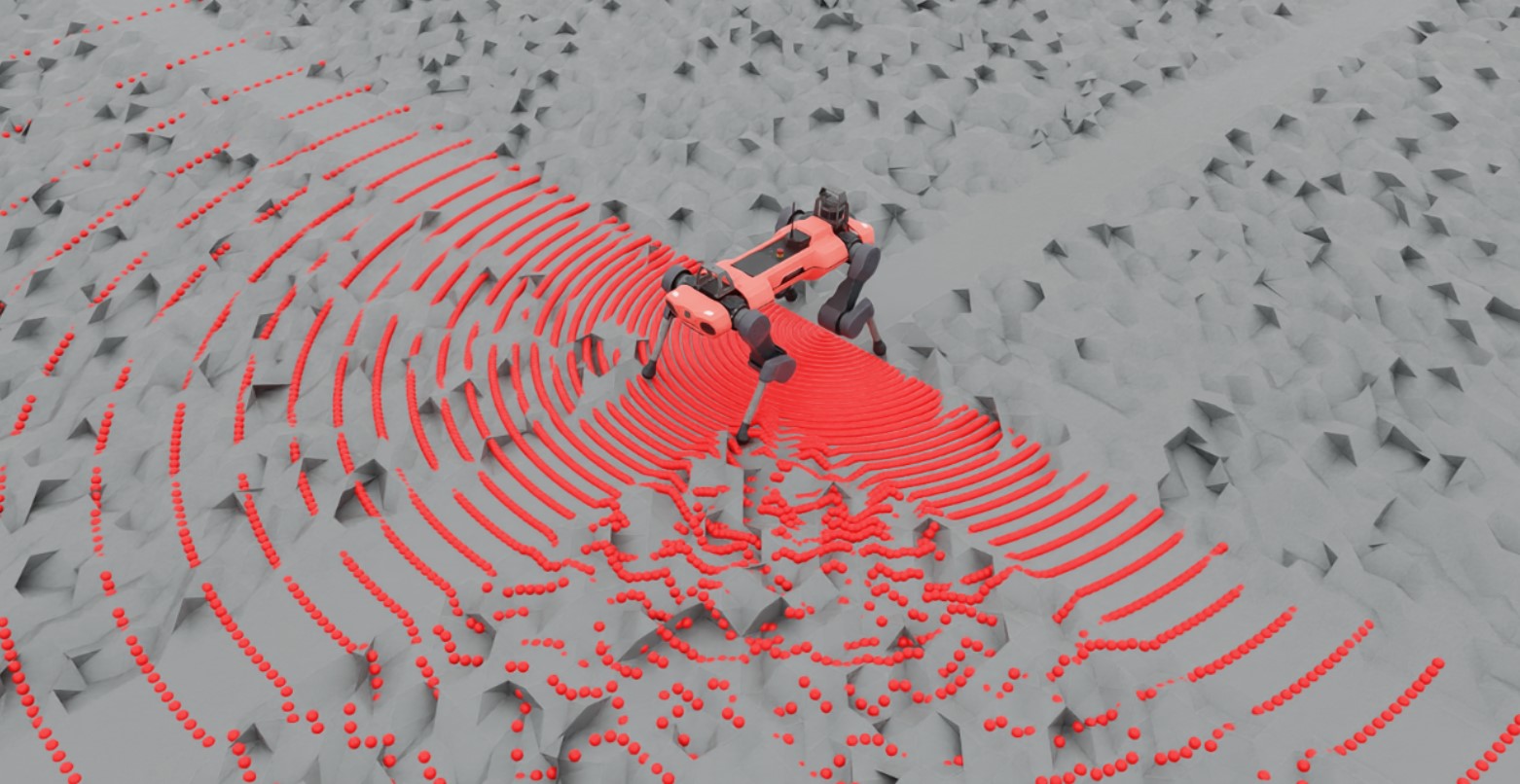

The Ray Caster sensor (and the ray caster camera) resembles RTX-based rendering in that both cast rays. The difference is that rays cast by the Ray Caster sensor return only collision information along the cast, and the direction of each ray can be specified individually. The rays do not bounce, and they are not affected by material properties or opacity. For each ray specified by the sensor, a line is traced along the ray's path and the location of the first collision with the specified mesh is returned. Some of our quadruped examples use this method to measure the local height field.
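To make "location of first collision" concrete, here is a minimal plain-Python sketch of a ray tested against a small triangle mesh with the Möller–Trumbore intersection test. It is an illustration only, not how the sensor is implemented (the sensor traces rays in Warp), and the helper names `ray_triangle_distance` and `first_hit` are hypothetical. A ray that hits nothing is reported as `inf`, mirroring the `inf` rows in the sensor output shown further down.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle_distance(origin, direction, triangle, eps=1e-9):
    """Distance along the ray to the triangle, or inf if the ray misses it."""
    v0, v1, v2 = triangle
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return math.inf
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return math.inf
    q = cross(s, e1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return math.inf
    t = dot(e2, q) * inv_det
    return t if t > eps else math.inf  # hits behind the origin do not count


def first_hit(origin, direction, triangles):
    """Closest hit point over all triangles, or (inf, inf, inf) on a miss."""
    t = min(
        (ray_triangle_distance(origin, direction, tri) for tri in triangles),
        default=math.inf,
    )
    if math.isinf(t):
        return (math.inf, math.inf, math.inf)
    return tuple(o + t * d for o, d in zip(origin, direction))


# A flat 2 m x 2 m ground patch at z = 0, built from two triangles.
ground = [
    ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (1.0, 1.0, 0.0)),
    ((-1.0, -1.0, 0.0), (1.0, 1.0, 0.0), (-1.0, 1.0, 0.0)),
]
down_hit = first_hit((0.2, -0.3, 0.5), (0.0, 0.0, -1.0), ground)
up_hit = first_hit((0.2, -0.3, 0.5), (0.0, 0.0, 1.0), ground)
print(down_hit, up_hit)
```

The downward ray lands on the ground patch directly below its origin, while the upward ray never meets the mesh and comes back as `inf` in all three coordinates. Note that no material, bounce, or opacity information enters the computation.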

To keep the sensor performant when there are many cloned environments, the line tracing is done directly in Warp. This is why the meshes to cast against must be identified explicitly: Warp loads that mesh data onto the device when the sensor is initialized. As a consequence, the current iteration of this sensor only works with strictly static meshes (meshes that are not changed from the defaults specified in their USD file). This constraint will be removed in future releases.

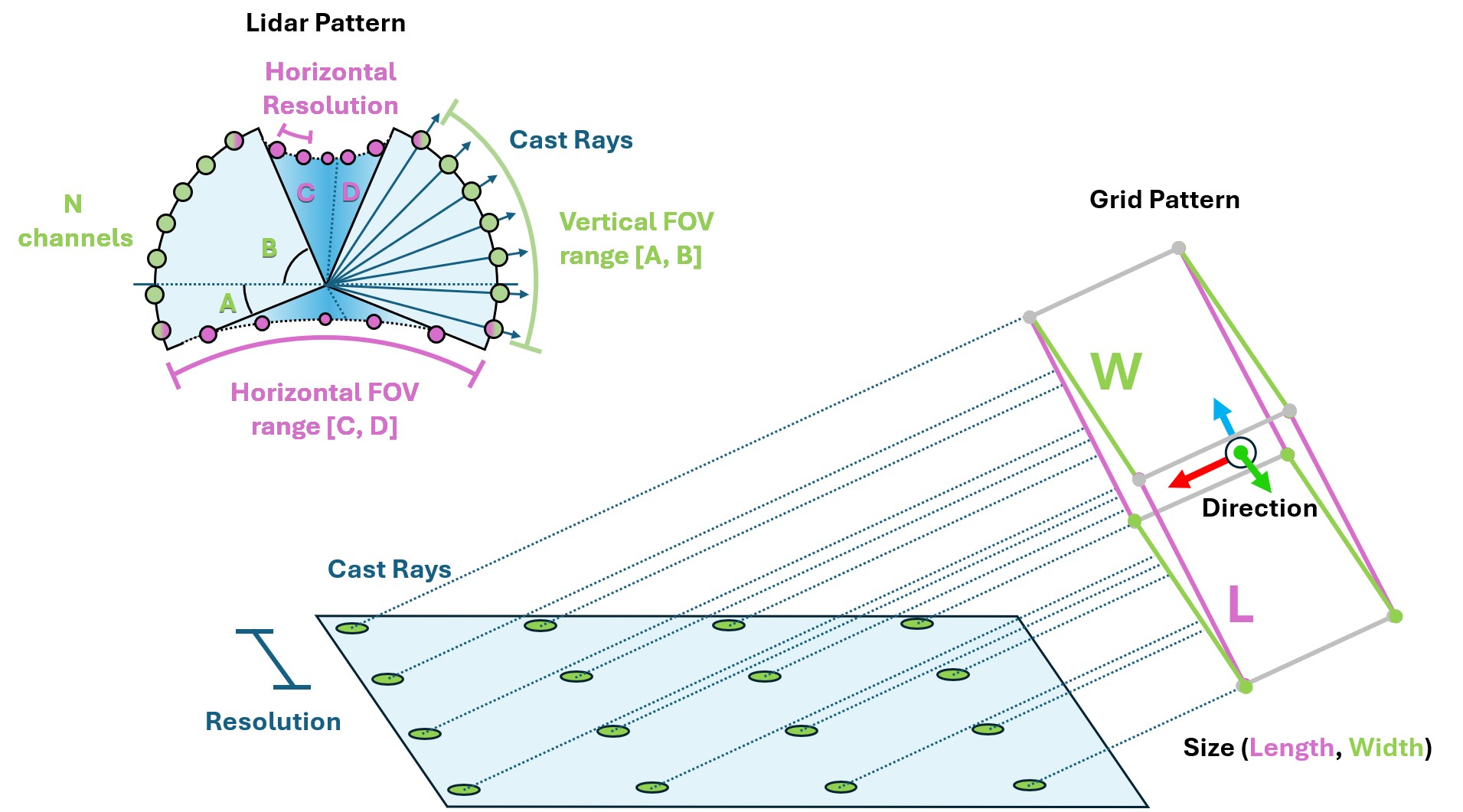

Using a ray caster sensor requires a pattern and a parent xform to attach the sensor to. The pattern defines how the rays are cast, while the prim properties define the orientation and position of the sensor (additional offsets can be specified for more exact placement). Isaac Lab supports a number of ray casting pattern configurations, including a generic LIDAR pattern and a grid pattern.

    @configclass
    class RaycasterSensorSceneCfg(InteractiveSceneCfg):
        """Design the scene with sensors on the robot."""

        # ground plane
        ground = AssetBaseCfg(
            prim_path="/World/Ground",
            spawn=sim_utils.UsdFileCfg(
                usd_path=f"{ISAAC_NUCLEUS_DIR}/Environments/Terrains/rough_plane.usd",
                scale=(1, 1, 1),
            ),
        )

        # lights
        dome_light = AssetBaseCfg(
            prim_path="/World/Light", spawn=sim_utils.DomeLightCfg(intensity=3000.0, color=(0.75, 0.75, 0.75))
        )

        # robot
        robot = ANYMAL_C_CFG.replace(prim_path="{ENV_REGEX_NS}/Robot")

        ray_caster = RayCasterCfg(
            prim_path="{ENV_REGEX_NS}/Robot/base/lidar_cage",
            update_period=1 / 60,
            offset=RayCasterCfg.OffsetCfg(pos=(0, 0, 0.5)),
            mesh_prim_paths=["/World/Ground"],
            attach_yaw_only=True,
            pattern_cfg=patterns.LidarPatternCfg(
                channels=100, vertical_fov_range=[-90, 90], horizontal_fov_range=[-90, 90], horizontal_res=1.0
            ),
            debug_vis=not args_cli.headless,
        )

Notice that the units in the pattern config are degrees! We also enable visualization here to show the pattern explicitly in the rendering; this is not required and should be disabled when tuning for performance.
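As a rough illustration of what a lidar-style pattern in degrees turns into, the sketch below converts the four pattern parameters into unit direction vectors. The function `lidar_directions` is hypothetical and is not Isaac Lab's implementation. Two choices in it are assumptions made so that the result agrees with the sensor output printed later on this page: the end angle of the horizontal range is excluded (giving 180 horizontal steps and 100 x 180 = 18000 rays), and rays are ordered channel by channel.

```python
import math


def lidar_directions(channels, vertical_fov_range, horizontal_fov_range, horizontal_res):
    """Unit ray directions for a lidar-style pattern; all angles are in degrees."""
    v_min, v_max = vertical_fov_range
    h_min, h_max = horizontal_fov_range

    # Vertical angles: `channels` evenly spaced values, both ends included.
    v_step = (v_max - v_min) / (channels - 1) if channels > 1 else 0.0
    vertical = [v_min + i * v_step for i in range(channels)]

    # Horizontal angles: one per `horizontal_res` degrees.
    # Assumption: the end angle itself is excluded.
    num_horizontal = round((h_max - h_min) / horizontal_res)
    horizontal = [h_min + j * horizontal_res for j in range(num_horizontal)]

    directions = []
    for v_deg in vertical:  # assumption: channel-major ordering
        v = math.radians(v_deg)
        for h_deg in horizontal:
            h = math.radians(h_deg)
            directions.append(
                (math.cos(v) * math.cos(h), math.cos(v) * math.sin(h), math.sin(v))
            )
    return directions


dirs = lidar_directions(
    channels=100,
    vertical_fov_range=(-90, 90),
    horizontal_fov_range=(-90, 90),
    horizontal_res=1.0,
)
print(len(dirs))
```

Passing radians by mistake (for example a vertical range of plus or minus pi/2) would collapse the pattern into a band only a few degrees wide, which is why the unit matters.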

Querying the sensor for data can be done at simulation run time like any other sensor.

    def run_simulator(sim: sim_utils.SimulationContext, scene: InteractiveScene):
        ...
        # Simulate physics
        while simulation_app.is_running():
            ...
            # print information from the sensors
            print("-------------------------------")
            print(scene["ray_caster"])
            print("Ray cast hit results: ", scene["ray_caster"].data.ray_hits_w)

    -------------------------------
    Ray-caster @ '/World/envs/env_.*/Robot/base/lidar_cage':
            view type            : <class 'isaacsim.core.prims.xform_prim.XFormPrim'>
            update period (s)    : 0.016666666666666666
            number of meshes     : 1
            number of sensors    : 1
            number of rays/sensor: 18000
            total number of rays : 18000
    Ray cast hit results:  tensor([[[-0.3698,  0.0357,  0.0000],
             [-0.3698,  0.0357,  0.0000],
             [-0.3698,  0.0357,  0.0000],
             ...,
             [    inf,     inf,     inf],
             [    inf,     inf,     inf],
             [    inf,     inf,     inf]]], device='cuda:0')
    -------------------------------

Here we can see the data returned by the sensor itself. Notice first that there are three nested brackets at the beginning and the end: this is because the data returned is batched by the number of sensors. The ray cast pattern itself has also been flattened, so the dimensions of the array are [N, B, 3], where N is the number of sensors, B is the number of cast rays in the pattern, and 3 is the dimension of the casting space. Here B is 18000, which is 100 channels times 180 horizontal steps. Finally, notice that the first several values in this casting pattern are the same: this is because the lidar pattern is spherical and we have specified our FOV to be hemispherical, which includes the poles. In this configuration, the “flattening pattern” becomes apparent: the first 180 entries are identical because they all point at the bottom pole of the hemisphere, and there are 180 of them because our horizontal FOV is 180 degrees with a resolution of 1 degree.
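The flattening described above can be sketched with two small helpers. Both are hypothetical and written in plain Python so they run without a simulator; they assume the channel-by-channel ordering implied by the text (all 180 horizontal steps of the first channel, then the next channel, and so on) and read the `inf` rows in the output as rays that did not hit the mesh.

```python
import math


def unflatten_ray_index(flat_index, num_horizontal):
    """Map a flat ray index to (channel, horizontal step), assuming channel-major order."""
    return divmod(flat_index, num_horizontal)


def count_hits(hits):
    """Count rays whose hit position is finite; missed rays are expected to be inf."""
    return sum(1 for hit in hits if all(math.isfinite(c) for c in hit))


# One sensor's worth of rays as (x, y, z) tuples, mimicking the printed tensor.
sample = [(-0.3698, 0.0357, 0.0)] * 3 + [(math.inf, math.inf, math.inf)] * 3

print(unflatten_ray_index(179, 180))  # last ray of the first channel
print(unflatten_ray_index(180, 180))  # first ray of the second channel
print(count_hits(sample))
```

On the real tensor, the same bookkeeping would normally be done with tensor operations instead of Python loops, for instance by reshaping the ray dimension into (channels, horizontal steps) and masking out non-finite rows.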

You can use this script to experiment with pattern configurations and build an intuition about how the data is stored by altering the triggered variable near the top of run_simulator. When it is set to False, the script waits for a short countdown and then saves the ray hits to cast_data.npy.

Code for raycaster_sensor.py

    # Copyright (c) 2022-2025, The Isaac Lab Project Developers.
    # All rights reserved.
    #
    # SPDX-License-Identifier: BSD-3-Clause

    import argparse
    import numpy as np

    from isaaclab.app import AppLauncher

    # add argparse arguments
    parser = argparse.ArgumentParser(description="Tutorial on adding sensors on a robot.")
    parser.add_argument("--num_envs", type=int, default=1, help="Number of environments to spawn.")
    # append AppLauncher cli args
    AppLauncher.add_app_launcher_args(parser)
    # parse the arguments
    args_cli = parser.parse_args()

    # launch omniverse app
    app_launcher = AppLauncher(args_cli)
    simulation_app = app_launcher.app

    """Rest everything follows."""

    import torch

    import isaaclab.sim as sim_utils
    from isaaclab.assets import AssetBaseCfg
    from isaaclab.scene import InteractiveScene, InteractiveSceneCfg
    from isaaclab.sensors.ray_caster import RayCasterCfg, patterns
    from isaaclab.utils import configclass
    from isaaclab.utils.assets import ISAAC_NUCLEUS_DIR

    ##
    # Pre-defined configs
    ##
    from isaaclab_assets.robots.anymal import ANYMAL_C_CFG  # isort: skip


    @configclass
    class RaycasterSensorSceneCfg(InteractiveSceneCfg):
        """Design the scene with sensors on the robot."""

        # ground plane
        ground = AssetBaseCfg(
            prim_path="/World/Ground",
            spawn=sim_utils.UsdFileCfg(
                usd_path=f"{ISAAC_NUCLEUS_DIR}/Environments/Terrains/rough_plane.usd",
                scale=(1, 1, 1),
            ),
        )

        # lights
        dome_light = AssetBaseCfg(
            prim_path="/World/Light", spawn=sim_utils.DomeLightCfg(intensity=3000.0, color=(0.75, 0.75, 0.75))
        )

        # robot
        robot = ANYMAL_C_CFG.replace(prim_path="{ENV_REGEX_NS}/Robot")

        ray_caster = RayCasterCfg(
            prim_path="{ENV_REGEX_NS}/Robot/base/lidar_cage",
            update_period=1 / 60,
            offset=RayCasterCfg.OffsetCfg(pos=(0, 0, 0.5)),
            mesh_prim_paths=["/World/Ground"],
            attach_yaw_only=True,
            pattern_cfg=patterns.LidarPatternCfg(
                channels=100, vertical_fov_range=[-90, 90], horizontal_fov_range=[-90, 90], horizontal_res=1.0
            ),
            debug_vis=not args_cli.headless,
        )


    def run_simulator(sim: sim_utils.SimulationContext, scene: InteractiveScene):
        """Run the simulator."""
        # Define simulation stepping
        sim_dt = sim.get_physics_dt()
        sim_time = 0.0
        count = 0

        # set triggered to False to save one snapshot of the ray hits to cast_data.npy
        triggered = True
        countdown = 42

        # Simulate physics
        while simulation_app.is_running():

            if count % 500 == 0:
                # reset counter
                count = 0
                # reset the scene entities
                # root state
                # we offset the root state by the origin since the states are written in simulation world frame
                # if this is not done, then the robots will be spawned at the (0, 0, 0) of the simulation world
                root_state = scene["robot"].data.default_root_state.clone()
                root_state[:, :3] += scene.env_origins
                scene["robot"].write_root_pose_to_sim(root_state[:, :7])
                scene["robot"].write_root_velocity_to_sim(root_state[:, 7:])
                # set joint positions with some noise
                joint_pos, joint_vel = (
                    scene["robot"].data.default_joint_pos.clone(),
                    scene["robot"].data.default_joint_vel.clone(),
                )
                joint_pos += torch.rand_like(joint_pos) * 0.1
                scene["robot"].write_joint_state_to_sim(joint_pos, joint_vel)
                # clear internal buffers
                scene.reset()
                print("[INFO]: Resetting robot state...")
            # Apply default actions to the robot
            # -- generate actions/commands
            targets = scene["robot"].data.default_joint_pos
            # -- apply action to the robot
            scene["robot"].set_joint_position_target(targets)
            # -- write data to sim
            scene.write_data_to_sim()
            # perform step
            sim.step()
            # update sim-time
            sim_time += sim_dt
            count += 1
            # update buffers
            scene.update(sim_dt)

            # print information from the sensors
            print("-------------------------------")
            print(scene["ray_caster"])
            print("Ray cast hit results: ", scene["ray_caster"].data.ray_hits_w)

            if not triggered:
                if countdown > 0:
                    countdown -= 1
                    continue
                data = scene["ray_caster"].data.ray_hits_w.cpu().numpy()
                np.save("cast_data.npy", data)
                triggered = True
            else:
                continue


    def main():
        """Main function."""

        # Initialize the simulation context
        sim_cfg = sim_utils.SimulationCfg(dt=0.005, device=args_cli.device)
        sim = sim_utils.SimulationContext(sim_cfg)
        # Set main camera
        sim.set_camera_view(eye=[3.5, 3.5, 3.5], target=[0.0, 0.0, 0.0])
        # design scene
        scene_cfg = RaycasterSensorSceneCfg(num_envs=args_cli.num_envs, env_spacing=2.0)
        scene = InteractiveScene(scene_cfg)
        # Play the simulator
        sim.reset()
        # Now we are ready!
        print("[INFO]: Setup complete...")
        # Run the simulator
        run_simulator(sim, scene)


    if __name__ == "__main__":
        # run the main function
        main()
        # close sim app
        simulation_app.close()